19 Aug 2025

As a spatial scientist, starting on Python was particularly interesting. The language is widely preferred for spatial data manipulation. Up to this point, I hadn’t delved into Python in any great depth, beyond encountering it in scripts in Esri software at my 9-to-5 job.

In this week’s Lecture, Shorts and Section, Python is treated like a skipping stone, touching the surface but never sinking to depth. The week was really about “How to Teach Yourself a New Programming Language”. The Problem Set reiterated the same message.

If I may gripe a little, every time the codespace had to be updated, I lost my theme - Cobalt 2. It was, however, not a pain getting things back to the way they were.

| Codespace My Way - A Dark Mode |

Learning a New Programming Language

Following the highlighted differences between C and Python was not a struggle. What I had to contend with was ‘throwing away’ all the attention to detail and ‘pseudo-control’ over what the code was doing. Python is high level, and I had to actively ignore the under-the-hood stuff I knew from C and ‘trust’ the computer to do the right thing. The compactness of Python code was a breath of fresh air!

Because indentation matters in Python, it more or less forces one to write clean code from the start. The CS50 coding environment screamed ‘Problem’ whenever a tab was missing.

After this brief run with Python, I found myself asking the popular question: Python or R? Because of the time I have invested in R for Data Science, I have developed some fondness for R. I am curious about what the future holds.

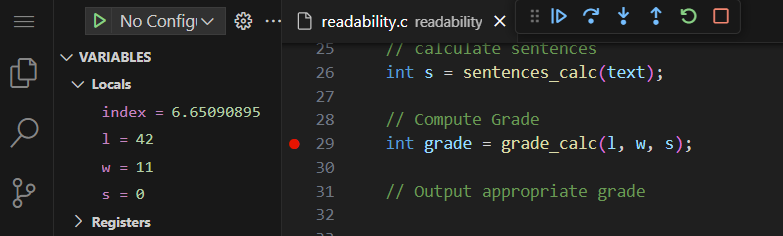

Revisiting Code

I grappled briefly with converting my for loops from C to Python (sentimental-mario-less). But because I understood my code, with a little experimentation I eventually got it to work.

In one of the problems, I found myself using Debug50 to trace variable changes. The problem brief from C had been twisted from dealing with integers to dealing with floating-point numbers in Python. It was exciting to experience floating-point imprecision first-hand! The computer was working with 0.009999999999999967 whereas my expectation was 0.01. As a consequence, my function returned 0.0. With some research I employed round(change, 2), which solved the problem. A successful debug always scratches the inquirer’s itch.
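The effect can be reproduced in a few lines. This is only a minimal sketch: the value of `leftover` is the one quoted above, while the floor division by 0.01 and the helper `to_cents` are my own illustrative assumptions, not the code from the problem set.

```python
def to_cents(dollars: float) -> int:
    """Convert a dollar amount to whole cents, rounding away float noise.

    Hypothetical helper: working in integer cents is one common way to
    avoid comparing floats at all.
    """
    return round(dollars * 100)


leftover = 0.009999999999999967  # the value the debugger showed

# Slightly less than 0.01, so "how many pennies fit?" answers zero.
print(leftover // 0.01)            # 0.0

# Rounding to two decimal places first restores the expected amount.
print(round(leftover, 2) // 0.01)  # 1.0

# The classic demonstration that decimal fractions are not exact in binary.
print(0.1 + 0.2 == 0.3)            # False

print(to_cents(0.41))              # 41
```

Rounding once at the input and then doing all arithmetic on integers means the imprecision cannot accumulate through repeated subtraction.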

I also found a bug in my older code which I had inadvertently gotten away with. It was in a word-counting function. An occurrence of ‘.’ or a space indicated the end of a word, but I hadn’t accounted for cases where they occurred together, viz. (dot)(space). I therefore ended up with an inflated word count.

Coding fatigue is a real thing. Even after six weeks of coding, tiny errors are still possible and the fundamentals of debugging still have to be observed. I was reminded of this when my program simply hung on execution because I had left out the code to increment a counter.
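The bug described above can be sketched as follows. Both functions are hypothetical reconstructions written for illustration, not my original code: the naive version counts every ‘.’ or space as the end of a word, while the second only counts a delimiter when it actually closes a word.

```python
def count_words_naive(text: str) -> int:
    """Buggy idea: every '.' or ' ' ends a word, so '. ' counts twice."""
    return sum(1 for ch in text if ch in ". ")


def count_words(text: str) -> int:
    """Count a word only when a delimiter follows at least one letter."""
    count = 0
    in_word = False
    for ch in text:
        if ch in ". ":
            if in_word:
                count += 1   # this delimiter closes a real word
            in_word = False  # consecutive delimiters add nothing
        else:
            in_word = True
    if in_word:
        count += 1           # text ended without a trailing delimiter
    return count


sample = "It works. Mostly."
print(count_words_naive(sample))  # 4
print(count_words(sample))        # 3
```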

Reflections

Again I learnt that every problem looks insurmountable at first glance. The problem set on DNA felt overwhelming on first encounter. But by applying modular programming, sub-tasking and pseudo-coding, I was soon on my way, dismantling it piece by piece.

I am looking forward to SQL and to taking a break from general-purpose programming languages. The week-to-week tempo I adopted because of limited time makes everything feel like a rat race, albeit an exciting one, with a deadline every weekend.

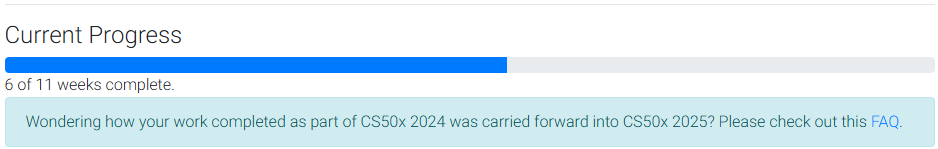

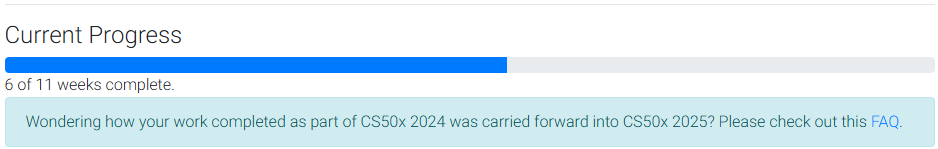

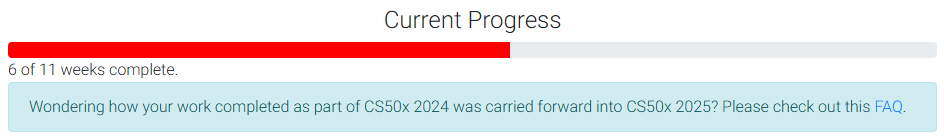

Let me put an end-of-post bar below in the form of a progress bar, which, surprisingly, has gone from red to blue at the moment.

| The Gradebook - Blue Zone Progress |

07 Aug 2025

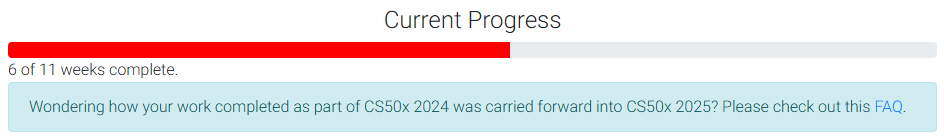

| The Gradebook - Looking at Progress |

So, after submitting my Week 5 Problem Set solutions, the above is my current progress. I am now just a little over the halfway mark of the course. I foresee, of course, that the Final Project (FP) will take longer than a week. The week’s lecture material was quite comprehensible, relaxing almost. Dr Malan has a way of explaining complicated concepts from basic building blocks, from the ground up. The video clip aids, such as the t-shirts from a box and those from hangers, really drove home the concepts of Stacks, FIFO and LIFO.

The previous week, on memory, was a bit intense as the code became more complex. Had my motivation for studying not been strong, Week 4 would have been a good time to quit. But, as I learned from Learning Hard Topics, some topics are challenging like that. With the content of Week 5, it’s clear now that it was a necessary foundation. The week is a culmination of C, and the course will veer off to Python in Week 6 (looking forward to that). It truly feels like a peak of the course and I am now expecting a plateau.
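As a small aside, the LIFO and FIFO ideas are easy to play with. The sketch below is only an illustration under my own assumptions: the shirt colours are made up, and a Python list and `collections.deque` stand in for the stack and the queue.

```python
from collections import deque

shirts = ["red", "green", "blue"]  # added in this order

# Stack (LIFO): the last shirt put on the pile is the first one taken off.
stack = list(shirts)
print(stack.pop())      # blue

# Queue (FIFO): the first shirt added is the first one taken.
queue = deque(shirts)
print(queue.popleft())  # red
```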

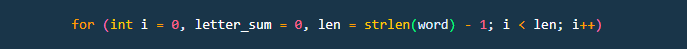

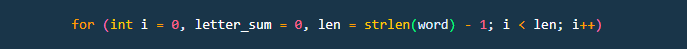

From so much use of loops, and from defaulting to ‘i’ as a loop variable, I concluded that the ‘i’ stands for any of: integer, iterate, initialise, index.

| The ‘i’ |

Being Practical with C

Going over Inheritance (Problem Set 5), one really appreciates how everything learnt up to this point - assignment (now of pointers), arrays, memory, loops, conditionals, truth checking and more - ties together. The utility of the C programming language is demonstrated through the implementation of a practical biology ‘problem’: determining permutations of blood type.

I couldn’t help pondering, though, how someone encountering this computer science material for the ‘first time’ would fare in my limited-time circumstances. A week-by-week tempo is manageable for someone who can spare a few hours a day to delve into the content, but not necessarily for a full-time worker, unless they burn the midnight oil.

What I learnt About Complex Problems

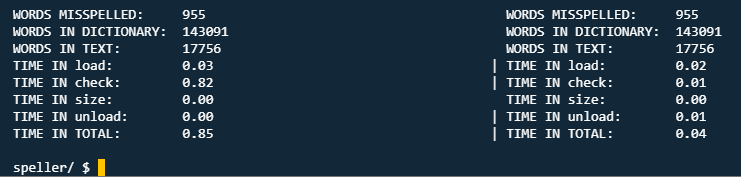

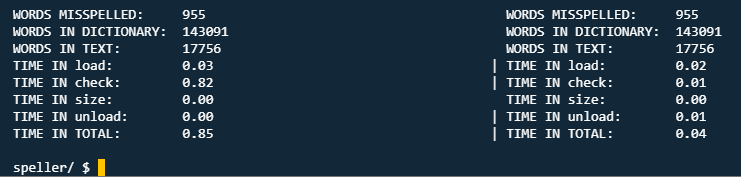

Speller (Problem Set 5, Part 2) was the most challenging problem of the course yet. It has so many ‘moving parts’, i.e. functions, and it incorporates many, if not all, of the concepts learnt so far. I sense that the objectives I set out at the beginning of the course are gradually being met. Some take-aways:

- I learnt (again), and got reinforcement, that breaking a problem into sub-problems should be the default approach when tackling a complex task. For each of the 5 functions to be completed, I could independently apply the most appropriate logic.

- Benchmarking is a fascinating way of improving one’s code and algorithms. I found the exercise of improving the efficiency of my already functional code exciting. I was experiencing the ideas of good program design in practice.

- The many functions and code files that other people had written, and which I had to work with, made me understand the concept of code collaboration better.

- Walking away from a challenge and coming back later seems to work for me, versus banging my head on the keyboard until I get it. There are instances, though, where once you get a ‘scent’ of how to crack the problem, you can’t detach until you get it done, and only then walk away with the delight of success.

| Benchmarking - How good is your implementation? |

31 Jul 2025

| German Shorthaired Pointer Dog |

Well, this is not a post about a dog or dogs, but yes, it is about pointers. The nervousness with which I started Week 4, Memory, was justified. One of the instructors explicitly said that pointers were probably going to be the most challenging topic tackled in CS50. malloc() was lingering in my memory as I started this week’s topic.

Prof. Malan brought literal training wheels to class, which he threw to the ground to demonstrate that things were being escalated, i.e. the fire hose was being opened.

The highlight this week was learning about hexadecimal (base-16). The Shorts video on hexadecimal really helped me understand the system, more so than the coverage in the main lecture. The light-bulb moment came when I saw the notation and recalled examples I had come across in computer science literature, such as 0xADC.
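To make the notation concrete, here is a minimal sketch of how a value like 0xADC maps to decimal. The helper `hex_to_int` is hypothetical and written only to show the place-value arithmetic; Python’s built-in `int(text, 16)` and `hex()` do the same job.

```python
HEX_DIGITS = "0123456789ABCDEF"


def hex_to_int(digits: str) -> int:
    """Convert a string of hex digits by repeated multiply-by-16-and-add."""
    total = 0
    for ch in digits.upper():
        total = total * 16 + HEX_DIGITS.index(ch)
    return total


# A = 10, D = 13, C = 12, so 0xADC = 10*256 + 13*16 + 12
print(hex_to_int("ADC"))   # 2780
print(0xADC)               # 2780 (Python understands the 0x prefix)
print(int("ADC", 16))      # 2780
print(hex(2780))           # 0xadc
print(format(255, "02X"))  # FF (one byte is exactly two hex digits)
```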

Learning Hard Topics

I ‘sat’ through the pointers lecture (Shorts) in order to get it! I was in no rush to get this topic over with, nor to rush to the Problem Set to move along the course. I wanted to develop my ability and resilience to learn difficult stuff. As Doug Lloyd pointed out:

pointers take a lot of practice to master. But the benefit we get from their correct use, far outweighs the effort it takes to learn and master them.

Doug’s quick take really exposed the fundamentals of pointers, and the topic sank in. The main consolation was learning about their utility in File I/O, an area which should excite any enthusiast, as it gives the programmer the power to manipulate files!

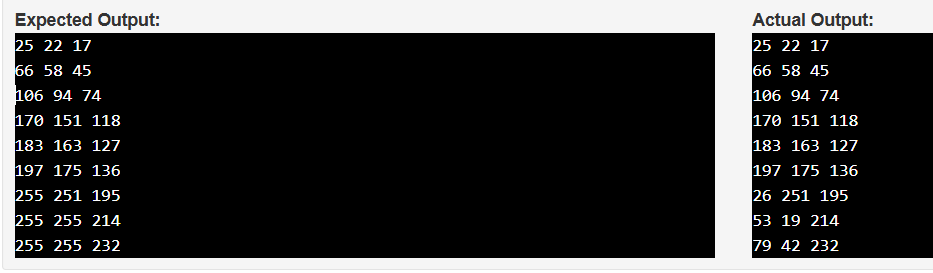

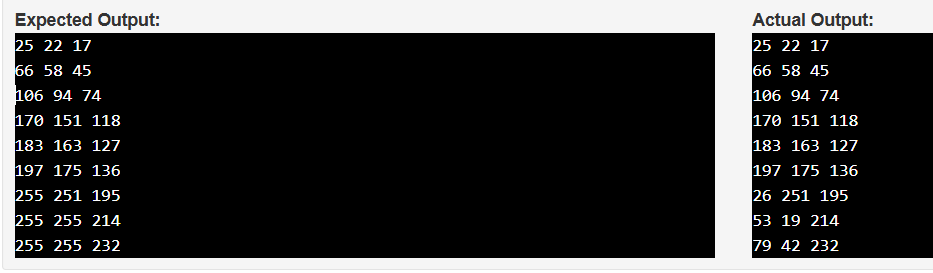

Getting through Problem Set 4 was a matter of small, progressive victories, one function at a time. Through it all, I got to understand nested loops and two-dimensional arrays even better; there were a lot of those in this pset. The victory lap was solving an upper-limit case in the image manipulation routine by investigating the output from the program. Getting the program control and condition selection right was the sticking point. More learning.

| Sepia Problems - Pixel Values > 255 |
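The upper-limit case can be sketched as below. This is an illustration under stated assumptions rather than my submitted C code: the coefficients are the commonly cited sepia weights, and `sepia_pixel` is a hypothetical helper that works on a single (red, green, blue) triple.

```python
def sepia_pixel(red: int, green: int, blue: int) -> tuple[int, int, int]:
    """Apply the commonly cited sepia weights, capping each channel at 255."""
    sepia_red = 0.393 * red + 0.769 * green + 0.189 * blue
    sepia_green = 0.349 * red + 0.686 * green + 0.168 * blue
    sepia_blue = 0.272 * red + 0.534 * green + 0.131 * blue
    # A channel is stored in 8 bits, so anything above 255 must be clamped;
    # without min() a bright pixel would overflow its channel.
    return (
        min(255, round(sepia_red)),
        min(255, round(sepia_green)),
        min(255, round(sepia_blue)),
    )


print(sepia_pixel(255, 255, 255))  # (255, 255, 239)
print(sepia_pixel(0, 0, 0))        # (0, 0, 0)
```

For a pure white pixel the red and green results come out well above 255 before capping, which is exactly the case that trips up an unguarded implementation.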

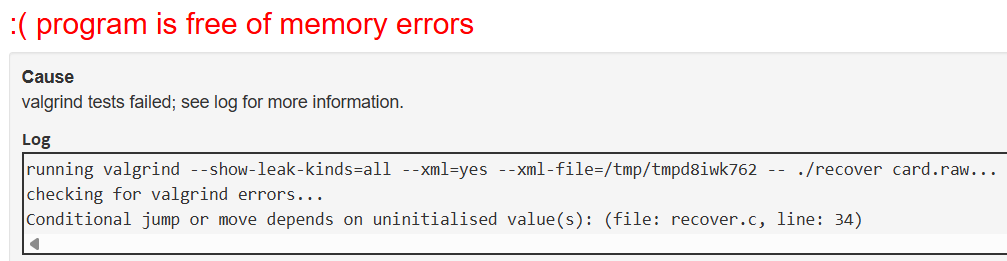

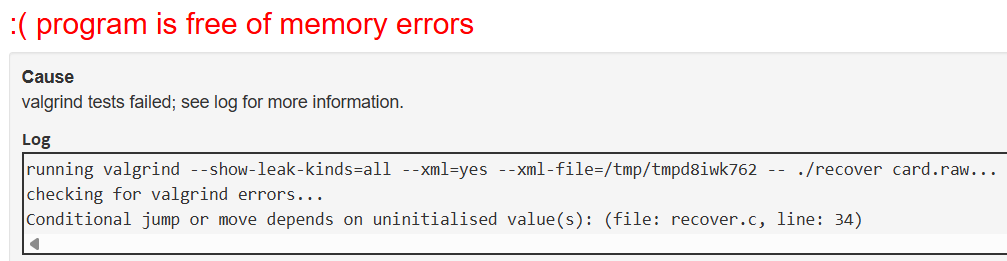

Drawing on the lecture, I had the opportunity to use Valgrind to investigate memory issues when check50 found my code faulty.

| Memory Issues |

What I learnt About Pointers

- Entirely within the content of CS50 (and, I’m certain, anywhere else), pointers are just addresses: addresses of the memory where variables live.

- Pointers allow us to pass data back and forth between functions, overcoming the limitation of local variable scope.

- A lot of doodling with arrows helps expose the foundational concept of what is happening when dealing with pointers.

- A single pass over the topic might not be sufficient to get what pointers are all about. Several alternative takes on the subject will most likely bring the point home. In the course there are three!

15 Jul 2025

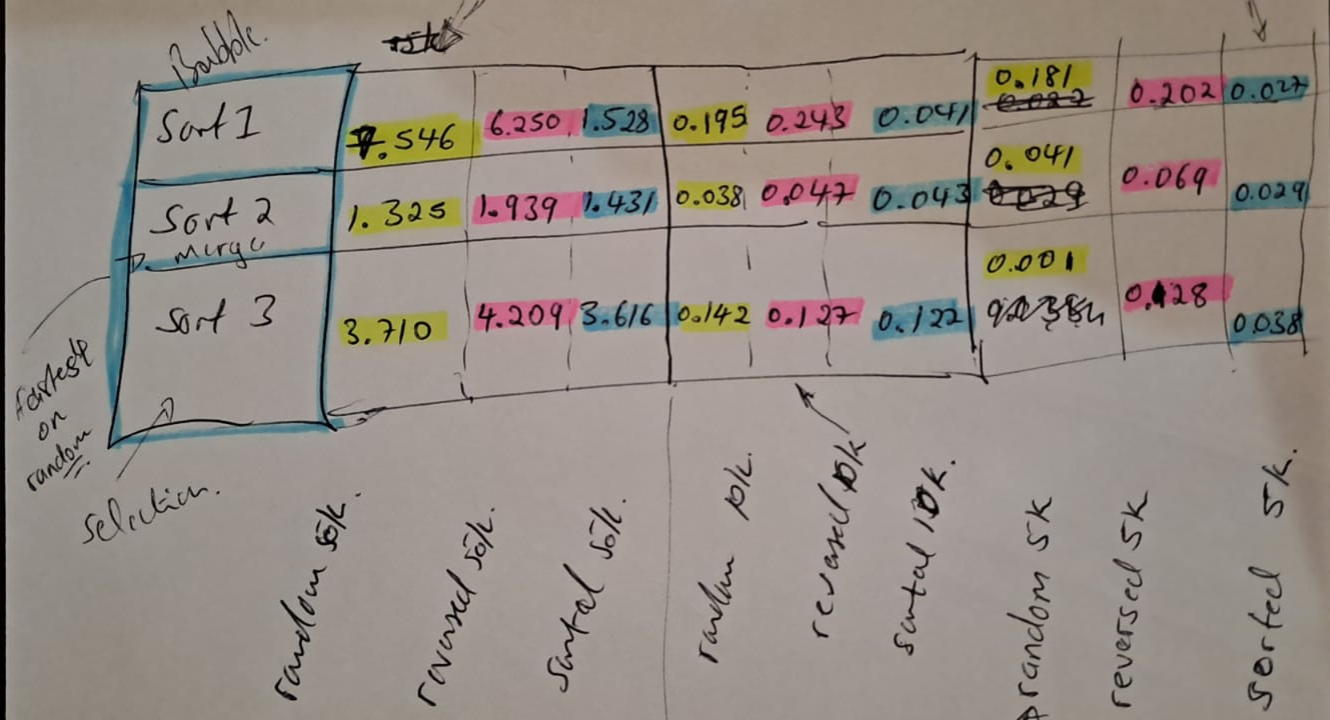

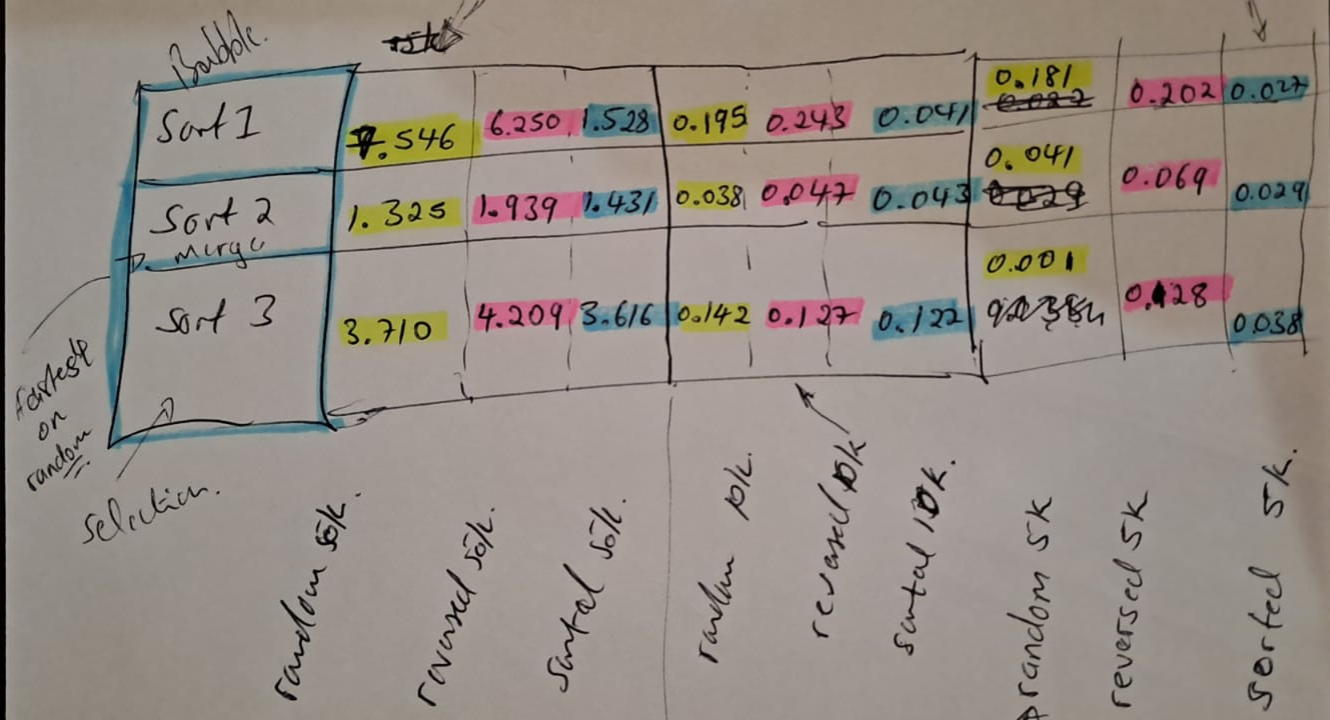

Last week’s Caesar challenge pushed my schedule out by a few days, and I had to pick up the pace this week, Week 3. Had the content been entirely new, I would have needed more time on arrays. This week’s focus was on thinking about solving problems better, thinking algorithmically. An end-of-class animation comparing Selection Sort, Bubble Sort and Merge Sort was priceless, succinctly illustrating good program design and efficiency in algorithms.

| Execution Time |

This week’s content was, overall, easy going and comprehensible. I could correctly identify and distinguish the three sorting algorithms, first time, by analysing the run-times sketched on the study note above.

While working through Problem Set 3, the small wins really kept morale and the drive to continue high. Incorrect use of a comparison operator can be code breaking. Using a debugging technique learnt in the course, viz. printing output at certain points of the code, I was able to identify the problem with my code without using the IDE’s debugging tools.
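The run-time comparison can be tried at home with a rough sketch like the one below. These are plain textbook versions of the three algorithms written for illustration, not the programs from the course; the list size and random seed are arbitrary assumptions, and actual timings will vary by machine.

```python
import random
import time


def selection_sort(items):
    """Repeatedly select the smallest remaining item: O(n^2) comparisons."""
    items = list(items)
    for i in range(len(items)):
        smallest = i
        for j in range(i + 1, len(items)):
            if items[j] < items[smallest]:
                smallest = j
        items[i], items[smallest] = items[smallest], items[i]
    return items


def bubble_sort(items):
    """Swap adjacent out-of-order pairs until a pass makes no swaps."""
    items = list(items)
    n = len(items)
    swapped = True
    while swapped:
        swapped = False
        for j in range(n - 1):
            if items[j] > items[j + 1]:
                items[j], items[j + 1] = items[j + 1], items[j]
                swapped = True
        n -= 1  # the largest item of this pass is now in place
    return items


def merge_sort(items):
    """Sort each half recursively, then merge: O(n log n)."""
    if len(items) <= 1:
        return list(items)
    mid = len(items) // 2
    left, right = merge_sort(items[:mid]), merge_sort(items[mid:])
    merged, i, j = [], 0, 0
    while i < len(left) and j < len(right):
        if left[i] <= right[j]:
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    merged.extend(left[i:])
    merged.extend(right[j:])
    return merged


random.seed(50)
data = [random.randint(0, 10_000) for _ in range(1_000)]

for sort in (selection_sort, bubble_sort, merge_sort):
    start = time.perf_counter()
    result = sort(data)
    elapsed = time.perf_counter() - start
    print(f"{sort.__name__:>15}: {elapsed:.4f} s, sorted={result == sorted(data)}")
```

On a random list, merge sort should finish noticeably faster than the two quadratic sorts, and the gap widens as the list grows.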

Wheels Off . . . Collaborate

- The Problem Set 3 tasks were increasingly challenging. The first two were quick wins and quite a warm-up. With the third problem, the training wheels were completely off! One could leverage the previously completed task, and that was it. Just the first rung of a complex ladder system. Programmatic and algorithmic thinking were scaled up a notch. Two-dimensional arrays were to be employed. The Runoff task challenged one’s ability to think in the abstract.

- For the first time, I went out to look for help in a Slack community, one of the many CS50 communities. It wasn’t long till I had a breakthrough and cracked the functions which were to be implemented.

- Due to pressing work commitments, I have to renege on my week-on-week plan for the course. I will continue with Week 4 in a week’s time. Wow! That was a lot of weeks in a few sentences. Not very good design.

06 Jul 2025

| Let’s code. |

This week I saw more and more of the screen above, meaning I spent more time in the coding environment. I also had time to go back to Week 1 to work on and submit an optional challenge, “mario-more”. This gave me an even better understanding of ‘for’ loops. Prof. Malan mentioned something in this week’s lecture that grabbed my attention and spurred me on in this journey. Paraphrased:

“When you’re done with the course, you’ll have this bottom-up understanding of what’s going on. You’ll get techniques and a mindset for solving real world problems.”

Debugging The Way to a Solution

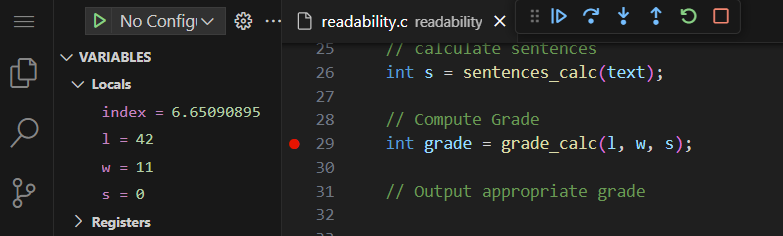

Most fascinating this week was the section on debugging, using CS50’s ‘Debug50’. I like seeing ‘under-the-hood’. It turned out I had to use this tool extensively for one of the challenges of the Problem Set. Watching variables change dynamically, albeit slowly, helps one quickly identify issues with the code. I notice that in the course resource material (Main Lecture, Section and Shorts), concepts are rehashed several times and from various angles, so you will eventually ‘get it.’

The theme of Good Program Design kept popping up and code was edited ‘live’ and several approaches demonstrated.

I had some ecstatic moments of glory and self-celebration, having coded a word capitaliser from scratch. Through a self-imposed coding sprint, I tackled one of the challenges, Readability, from start to finish without first looking at the hints and helps that come with the problem specs. Much time was spent with Debug50 as the program passed some correctness tests and failed others. When I eventually looked at the proposed approach, it was satisfying to notice that my approach was not that much different.

| Popping the hood - Debugging Code with CS50’s Debug50 |

Thinking Programmatically, This Week’s Take-Aways

- One of the challenges this week, Scrabble, pushed the limits of my programmatic thinking. I couldn’t quickly crack the problem and find an approach. I had to go through the solution guide without a ready approach of my own. I learnt that it’s okay not to know, and that it is all part of developing programmatic thinking muscle. My understanding of arrays improved, as I could understand the solution easily, but coming up with the idea/approach is what I lacked.

- Pseudo-coding really helps with the thought process and helps break down the problem into bite-sized parts. You quickly realise where functions can be employed and you’re able to abstract as much as possible. This isolation of ‘small solutions’ makes the problem look much smaller and surmountable.

- This week the training wheels are still on, but the pushes and directions are becoming fewer and fewer. Only a very ‘weak’ framework for tackling the problem set is provided. I did a lot of rubber-ducking with the CS50 Duck Debugger, and used a lot of Debug50 as well. With the third task of the problem set, it took me a while to notice a variable assignment error which produced partially correct output. It was an exciting time debugging, and equally frustrating since I was sure the code was correct; that’s when the Duck really helped.

- With a ‘correct’ and functioning program at hand, I asked the AI to check its design with design50. My program worked all right, but there were a number of suggested design changes. My programmatic thinking is improving. I should focus on improving design as well.